TheInterviewTimes.com | February 15, 2026 | 11:30 PM (IST) | New Delhi

AI Content Laws: India mandates a 3-hour deadline for social media platforms to remove flagged unlawful content, including harmful AI-generated material, under the amended IT Rules, 2021, tightening deepfake and misinformation controls.

The Government of India has introduced sweeping amendments to its digital regulations, mandating that major social media platforms remove flagged unlawful content within three hours of receiving official orders. The move sharply reduces the earlier 36-hour compliance window and signals a tougher stance against misinformation and AI-generated harmful content, including deepfakes.

The amendments to the Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 were notified by the Ministry of Electronics and Information Technology (MeitY) on February 10, 2026, and come into force on February 20, 2026.

What Has Changed in the IT Rules?

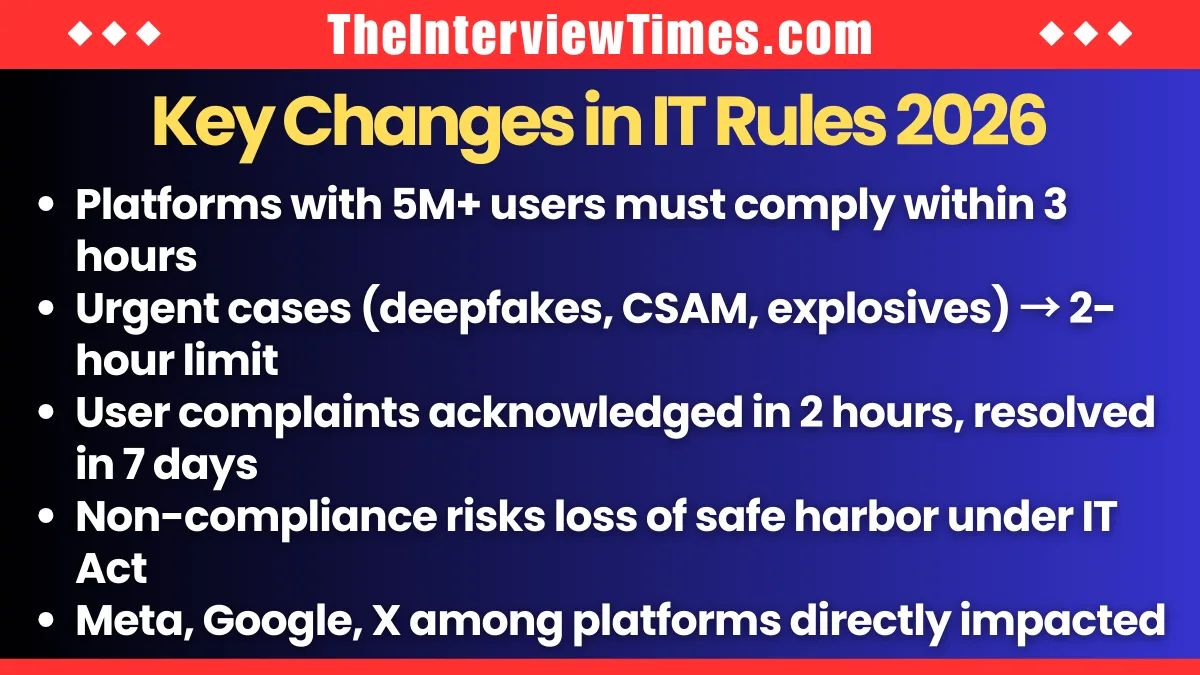

The revised framework applies to “significant social media intermediaries,” defined as platforms with more than 5 million registered users in India. These include companies such as:

- Meta (Facebook and Instagram)

- Google (YouTube)

- X (formerly Twitter)

Under the updated rules:

- Platforms must remove government-flagged unlawful content within three hours.

- In urgent categories such as non-consensual intimate imagery, child sexual abuse material, deepfake impersonation, or content linked to explosives, compliance time may be reduced to two hours.

- User complaints must be acknowledged within two hours and resolved within seven days.
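The deadlines listed above come down to simple time arithmetic. Below is a minimal Python sketch, assuming the windows as reported in this article; the category names, constants, and function names are hypothetical illustrations and are not taken from the notification itself.

```python
from datetime import datetime, timedelta, timezone

# Indian Standard Time (UTC+05:30), used here only for readable examples.
IST = timezone(timedelta(hours=5, minutes=30))

# Windows as reported in the article; the dictionary keys are hypothetical labels.
TAKEDOWN_WINDOWS = {
    "government_flagged": timedelta(hours=3),  # general unlawful content
    "urgent": timedelta(hours=2),              # e.g. intimate imagery, deepfake impersonation
}
COMPLAINT_ACK_WINDOW = timedelta(hours=2)
COMPLAINT_RESOLUTION_WINDOW = timedelta(days=7)


def takedown_deadline(received_at: datetime, category: str = "government_flagged") -> datetime:
    """Return the latest time by which flagged content must be removed."""
    return received_at + TAKEDOWN_WINDOWS[category]


def complaint_deadlines(filed_at: datetime) -> tuple[datetime, datetime]:
    """Return (acknowledge_by, resolve_by) for a user complaint."""
    return filed_at + COMPLAINT_ACK_WINDOW, filed_at + COMPLAINT_RESOLUTION_WINDOW


def is_overdue(received_at: datetime, now: datetime, category: str = "government_flagged") -> bool:
    """True if the takedown window has already elapsed at time `now`."""
    return now > takedown_deadline(received_at, category)


if __name__ == "__main__":
    order_time = datetime(2026, 2, 20, 10, 0, tzinfo=IST)
    print("Remove by:", takedown_deadline(order_time).isoformat())
    print("Urgent remove by:", takedown_deadline(order_time, "urgent").isoformat())
```

Under the earlier 36-hour window, the same 10:00 AM order would have allowed removal as late as 10:00 PM the following day; under the reported three-hour rule the cutoff falls at 1:00 PM the same day.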

Failure to comply could result in platforms losing safe harbor protections under Section 79 of India’s IT Act, exposing them to legal liability.

Focus on AI-Generated and Synthetic Content

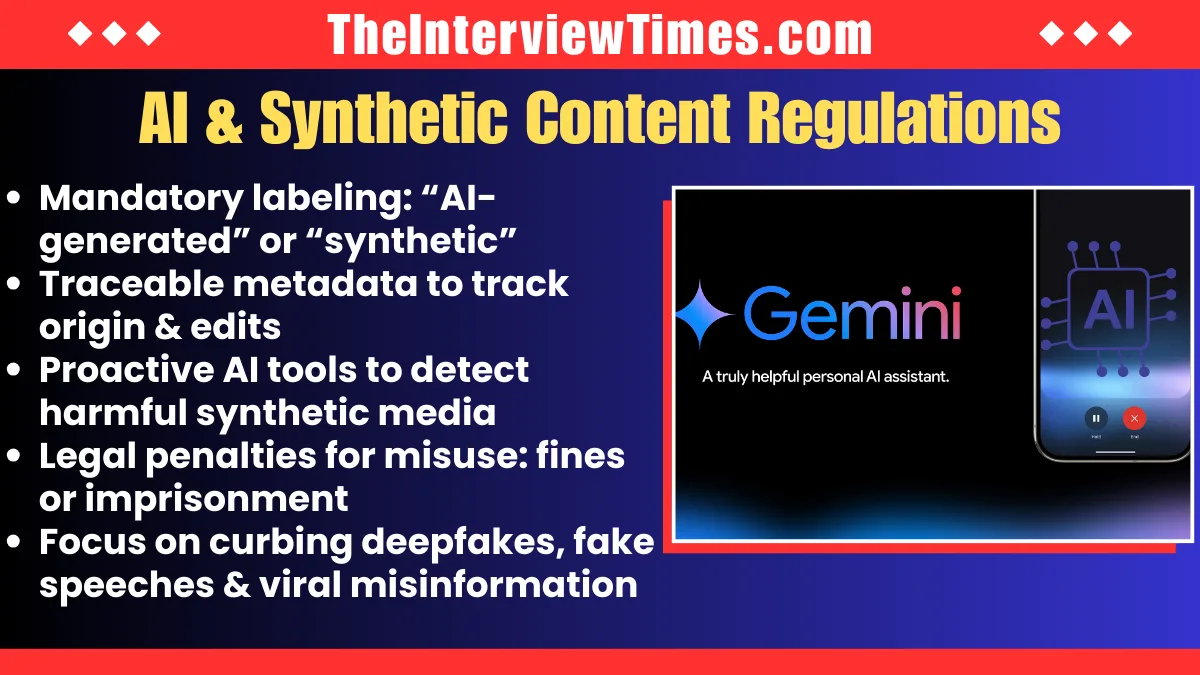

A key feature of the amendment is its explicit coverage of synthetically generated information (SGI), including AI-created audio, video, and images.

Platforms are now required to:

- Label AI-generated posts with clear tags such as “AI-generated” or “synthetic.”

- Embed traceable metadata or provenance data to identify content origin and modifications.

- Deploy proactive AI tools to detect and block harmful synthetic media.

- Warn users about legal penalties, including fines or imprisonment, for misuse of AI-generated content.
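The labelling and provenance requirements above can be pictured as a small record attached to each piece of content. The following Python sketch is purely illustrative: the article does not describe a prescribed metadata format, so the `ProvenanceRecord` fields, the function names, and the use of a SHA-256 fingerprint are all assumptions.

```python
import hashlib
from dataclasses import dataclass, field


@dataclass
class ProvenanceRecord:
    """Hypothetical provenance record; the field names are assumptions."""
    content_sha256: str   # fingerprint of the current content bytes
    label: str            # visible tag shown to users
    generator: str        # tool or model that produced the content
    modifications: list[str] = field(default_factory=list)


def fingerprint(content: bytes) -> str:
    """Return the SHA-256 hex digest of the content."""
    return hashlib.sha256(content).hexdigest()


def make_record(content: bytes, generator: str) -> ProvenanceRecord:
    """Create a record for newly generated synthetic content."""
    return ProvenanceRecord(fingerprint(content), "AI-generated", generator)


def record_modification(record: ProvenanceRecord, new_content: bytes, note: str) -> ProvenanceRecord:
    """Return a new record for edited content, keeping the edit history."""
    return ProvenanceRecord(
        content_sha256=fingerprint(new_content),
        label=record.label,
        generator=record.generator,
        modifications=[*record.modifications, note],
    )


def matches(record: ProvenanceRecord, content: bytes) -> bool:
    """Check whether the content bytes correspond to the record's fingerprint."""
    return record.content_sha256 == fingerprint(content)
```

A fingerprint of this kind only shows whether a file matches its record; it does not survive re-encoding or screenshots, which is one reason provenance schemes in practice tend to rely on signed or embedded metadata.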

The government’s move follows growing concerns over deepfake videos impersonating public figures, fabricated political speeches, and viral misinformation campaigns. With India hosting over one billion internet users, authorities argue that rapid-response systems are necessary to prevent social unrest and electoral manipulation.

Government’s Rationale

According to the official notification by MeitY, the reforms aim to “foster accountability and protect citizens from deceptive digital content.” Officials stress that the shortened deadline reflects the urgent threat posed by generative AI technologies that can create realistic fake content within minutes.

India already issues thousands of takedown requests each year. In the first half of 2025 alone, Meta reportedly restricted over 28,000 content items in India following government directives.

The new three-hour rule is seen as a preventive measure to ensure quicker intervention before harmful content spreads widely.

Industry and Free Speech Concerns

The reforms have sparked debate among legal experts and digital rights advocates.

Critics argue that a three-hour compliance window may be operationally challenging, given the scale of content uploaded daily. Platforms may increasingly rely on automated AI moderation systems, raising fears of:

- Over-censorship

- Mistaken removal of legitimate political speech

- Chilling effects on free expression

Policy think tanks have warned that the tighter deadlines could significantly increase compliance costs and pressure platforms to err on the side of removal to avoid legal risk.

There are also concerns that creators may begin self-censoring on sensitive topics such as politics, religion, or public policy to avoid takedown risks.

Global Implications

With these amendments, India positions itself among the most stringent jurisdictions globally on AI and digital content regulation. The move may influence future global debates on AI governance, especially in large democracies grappling with misinformation.

Social media companies are reportedly strengthening India-based compliance teams and upgrading AI detection tools ahead of the February 20 enforcement date. Legal challenges from industry bodies or civil society groups remain possible, but for now the rules are set to take effect as scheduled.

The Bigger Picture

India’s new three-hour takedown mandate reflects a decisive shift toward rapid digital enforcement in the AI era. While the government frames it as a necessary step to protect users from deepfakes and harmful misinformation, critics caution against unintended consequences for free speech and innovation.

As artificial intelligence continues to evolve, the balance between digital safety and freedom of expression is likely to remain at the center of India’s technology policy debates.
